Apple Vision Pro: Is This Meta’s iPhone Moment?

There is a scene in the recently released movie BlackBerry in which the employees at Research In Motion, maker of the eponymous smartphone, are watching Steve Jobs deliver his iPhone keynote in 2007. They quickly realize that the iPhone is the future.

Jobs opened the keynote by noting that he had been waiting two and a half years for this moment. He said that “Every once in a while, a revolutionary product comes along that changes everything…” and went on to talk about the Mac in 1984 and the iPod in 2001.

Then, Jobs said that on that day in 2007, he was going to introduce three new such devices: a widescreen iPod with touch controls, a revolutionary mobile phone, and a breakthrough internet communications device.

But these weren’t really three separate devices. “This is one device. And we are calling it… iPhone.”

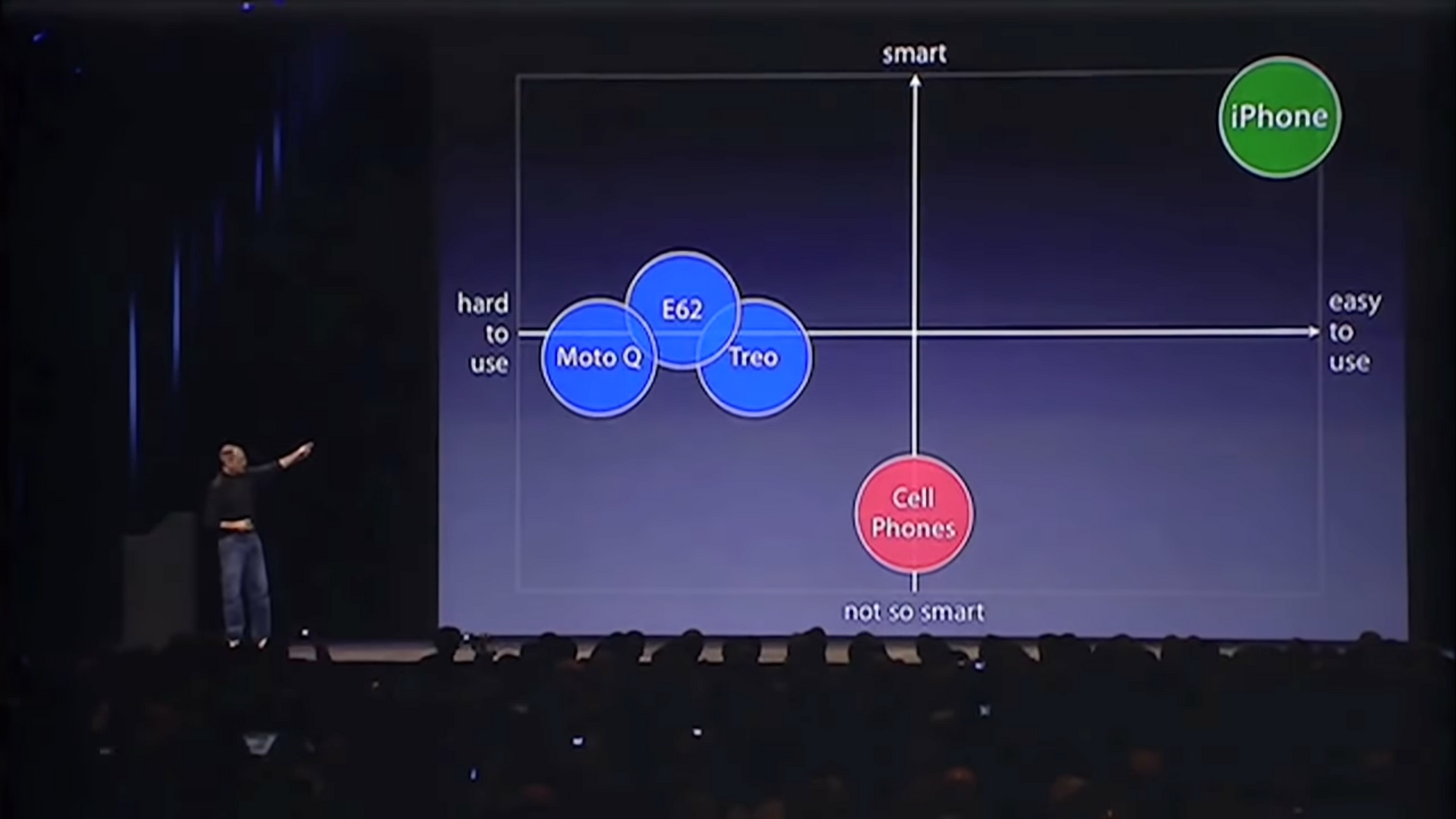

Jobs noted that the existing competition (BlackBerry, Nokia, Motorola, and others) ranged from not so smart to hard to use. Apple’s goal was to build something actually smart and easy to use.

The rest, as they say, is history.

Watching the June 5, 2023 keynote (the Vision Pro segment starts at 1:20:50), now with Tim Cook, it felt like history repeating itself.

Apple Vision Pro is a new computing platform. The Mac introduced personal computing. The iPhone, mobile computing. Vision Pro creates spatial computing.

Towards the end of the keynote, Mike Rockwell noted, “If you purchased a state-of-the-art TV, surround sound system, powerful computer with multiple high definition displays, high-end camera and more, you still would not have come close to what Vision Pro delivers.”

More than three devices in one. Clearly, this is the future.

The big question in my mind was:

The Meta Quest team watching this keynote right now… are they like those Research in Motion employees back in 2007? In other words, is this Meta’s iPhone moment?

The question is important because Mark Zuckerberg spent $2 billion to acquire Oculus in 2014. The strategic rationale was to control Facebook’s future; no longer would Facebook live under the control of the iOS and Android platforms.

At the time, Zuck wrote a detailed memo laying out the rationale for acquiring Oculus. I wrote about the memo here (that link also has the podcast episode where you can listen to the memo if you prefer).

Since acquiring Oculus, Zuckerberg has rebranded his company Meta and spent at least an additional $45 billion on the Reality Labs division to develop that business.

Forty-five billion is the cumulative operating loss since the division was broken out, including some estimates for the years before 2019. It does not include capital expenditures to support that business, which have also been in the several billions of dollars.

What has all this capital bought Zuckerberg thus far? Not much. Meta Quest and related software sales are a rounding error. Reality Labs is still losing money. It lost $13.7 billion in 2022 and Zuckerberg said he expects Reality Labs operating losses to increase year-over-year in 2023.

For some context on what all this money means, Tesla’s total invested capital thus far is around $30 billion (in terms of capital expenditures). For that, the company generated $14 billion of pre-tax income last year.

In this context, Zuckerberg’s investment in Oculus and Reality Labs looks thus far like a massive misallocation of capital, alongside the $45 billion Meta spent on share repurchases in 2021 at a price of $330 per share when the company knew it was facing iOS-related headwinds.

We don’t know how much Apple has spent to develop Vision Pro, but it’s most certainly significantly less than what Zuckerberg has spent on Reality Labs.

Vision Pro vs. Meta Quest

The critique of this critique is that these are apples and oranges. The Vision Pro is a high-end, $3,500 device aimed at professional users (thus the name, “Pro”), whereas the Meta Quest 3, at $500, is clearly the mass-market winner.

Not so fast.

I’ve owned every Oculus and Quest device thus far. My first VR experience was in 2017, and I first wrote about it in 2018. I have always been excited about the possibilities of VR (I’m using “VR” very loosely here, whether it’s all-enclosed virtual reality, augmented reality, or a mix, like Apple’s device).

The dream, for me, would be to have a very high-resolution device that could be used for work and education.

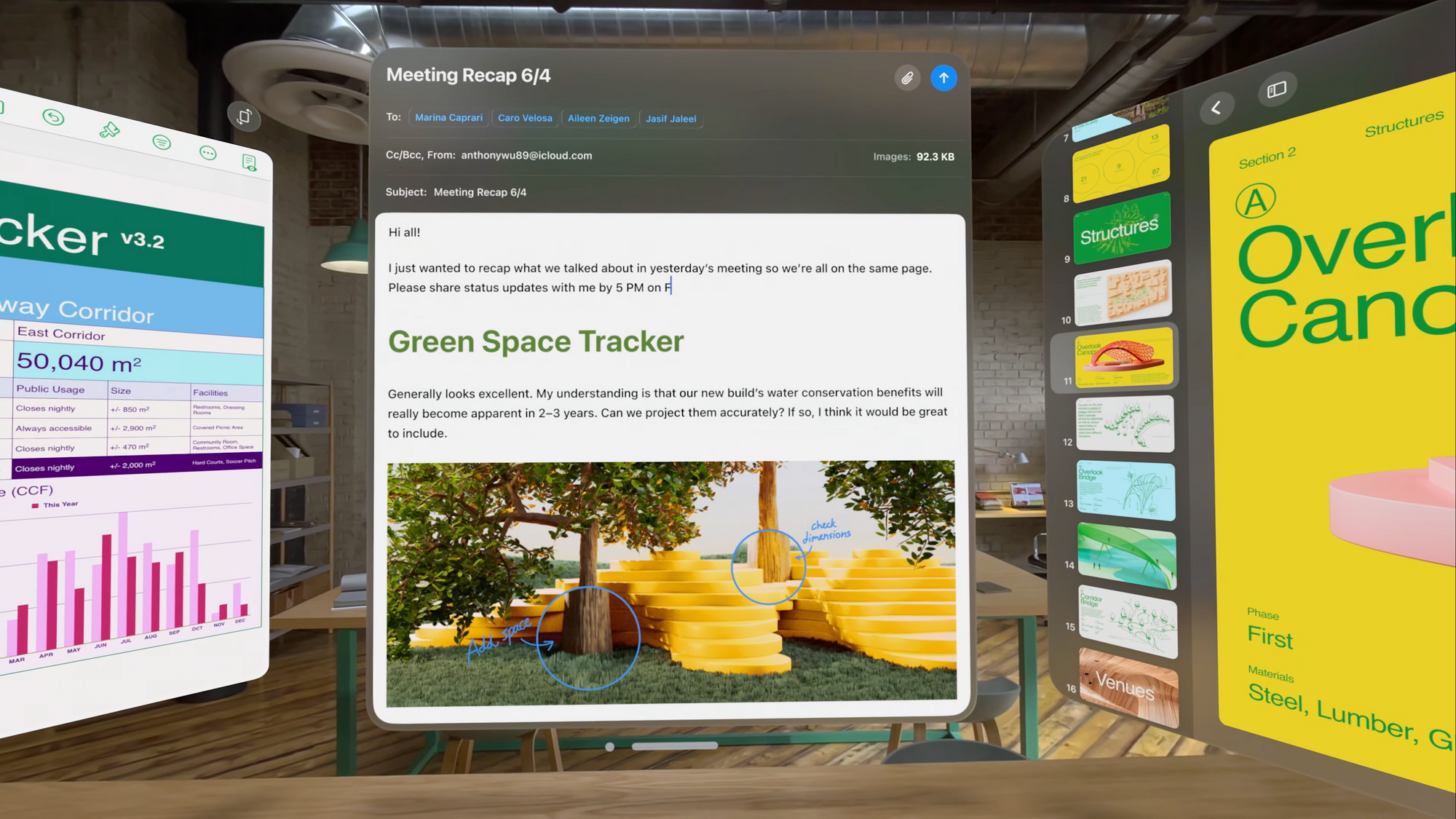

Imagine being able to take your office with you anywhere, and to be able to put up multiple high-resolution screens with any content.

And imagine being able to learn about the human heart—for example—by examining a three-dimensional beating heart and peering inside it.

This vision is exactly what Apple has delivered.

But it’s so much more. On the matrix that Jobs drew for the iPhone, the Vision Pro seems to score very much in the “easy to use” quadrant (I have not tried the Vision Pro but have read the accounts of the few lucky folks who were able to test it for thirty minutes after the Apple keynote, and they are consistent in their awe for the Vision Pro’s ease-of-use).

The Meta Quest relies on hand controllers that are excellent and easy to use. They’re great for gaming, but not for efficiently controlling a work environment. The paradigm is pointing at icons and tapping them, similar to a mouse, but harder to control.

The Vision Pro, with its many inward-facing infrared cameras, has eye tracking precise enough to make it so that all you do is glance at an icon, and it’s selected. Then, by simply touching your fingertips, you “click” on it. Folks who have tried it have called the experience magical. There are good accounts here, here, here, here, here, and here.

Perhaps the most important problem Apple solved is that of bootstrapping an ecosystem. From day one, Apple’s Vision Pro will run all of a user’s existing iPhone and iPad apps. It will fully integrate with the iCloud Photos library and with Notes, and it will have all the Office 365 apps, Adobe Lightroom, and so on.

A user can even drag a window from a Mac into Vision Pro, and work on that window alongside other apps in the workspace. I run Windows 11 alongside macOS on my Mac. This means that I could have my entire office, including Windows 11, inside Vision Pro.

Vision Pro also has, from day one, support from Apple’s vast set of APIs and frameworks (the Vision Pro segment of the Platforms State of the Union is worth watching).

All of this is made possible in part by Apple’s custom silicon, which has benefited from years of iteration. It’s one reason why Apple’s laptops are the most powerful and most energy efficient in the industry.

This contrasts with the Meta Quest, which has none of these things. All of my Meta Quest and Oculus headsets were worn a few times and have since sat in the closet collecting dust. The Meta Quest is incredibly fun and immersive, but the main use case right now is gaming. This is not a frequent or daily use case, at least not for me. And my experience is far from unique, judging by Meta’s disappointment with Quest retention rates.

The Strategic Problem for Meta

All of this poses a huge strategic problem for Meta. It is estimated that about two thirds of the monetization that the company gets on mobile is from iOS, that is, from iPhone users, who tend to be higher income. And only a fraction of Meta’s monetization comes from desktop, so about two thirds of Meta’s revenues are under Apple’s thumb: subject to the whims of Apple’s App Store rules and ever-changing privacy efforts that sometimes feel designed to simply hurt Meta.

The whole point of acquiring Oculus for $2 billion, then pouring an additional $45 billion into Reality Labs, was to own the next computing platform. Desktop went to Microsoft, then Apple. Mobile went to iOS and Android, but iOS gets the lion’s share of the profit pool.

After the Vision Pro announcement, it looks to me like visionOS, the operating system for the Vision Pro, will also get the lion’s share of the spatial computing profit pool.

The obvious counterargument is price: at $3,500, who can afford to buy a Vision Pro? This will not be a mass market product.

Technology is, however, massively deflationary. The chips, sensors, cameras, and batteries that are part of the Vision Pro will get better and cheaper. The first iPhone was priced at $599. Inflation-adjusted, that is nearly $900.

Today, you can buy a very capable iPhone SE, which is vastly better than the first iPhone (remember, there wasn’t even an App Store back then), for just $429 in today’s dollars. With Apple’s zero-interest financing, it’s just $17.87 per month for 24 months.
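For readers who want to check the financing arithmetic, here is a minimal sketch. It assumes a zero-interest plan is simply the price divided evenly across the term; the function names are made up for illustration and are not anything Apple publishes. Working backward from the quoted monthly payment gives the total the plan implies.

```python
def monthly_payment(price: float, months: int) -> float:
    """Zero-interest installment: the price split evenly across the term."""
    return price / months


def implied_price(payment: float, months: int) -> float:
    """Total paid over the term, given a fixed monthly payment."""
    return payment * months


# Figures quoted in the article: $17.87 per month for 24 months.
total = implied_price(17.87, 24)
print(f"Implied total: ${total:.2f}")

# Assumption for illustration: a $429 list price, split over 24 months.
print(f"Monthly on $429: ${monthly_payment(429, 24):.3f}")
```

The quoted monthly figure works out to a total just under $429, so the payment appears to be that price divided by 24 and truncated to the cent.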

I have zero doubt that Apple wants everyone to be able to afford a spatial computer. And I also have no doubt that Apple will be able to introduce less expensive models over time.

We are in the Apple I era of spatial computing. That model was introduced at $666.66 in 1976, which is $3,554 in today’s dollars. Like the Vision Pro.
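As a rough sanity check on the inflation comparisons, the sketch below backs out the price multiplier implied by the figures quoted in this article. It does not use any official CPI series; the inputs are only the article's own numbers (with "nearly $900" treated as $900), and the function name is hypothetical.

```python
def implied_multiplier(nominal: float, adjusted: float) -> float:
    """Ratio of the inflation-adjusted price to the original sticker price."""
    return adjusted / nominal


# Figures as quoted in the article; "nearly $900" is approximated as 900.
apple_i = implied_multiplier(666.66, 3554)   # Apple I, 1976
iphone = implied_multiplier(599, 900)        # first iPhone, 2007

# How the adjusted Apple I price compares with the Vision Pro's $3,500.
vs_vision_pro = 3554 / 3500

print(f"Apple I multiplier: {apple_i:.2f}x")
print(f"iPhone multiplier:  {iphone:.2f}x")
print(f"Adjusted Apple I / Vision Pro price: {vs_vision_pro:.3f}")
```

On these numbers, prices have risen a bit more than fivefold since 1976 and about one and a half times since 2007, and the adjusted Apple I price lands within about two percent of the Vision Pro's.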

Feels like history repeating itself.